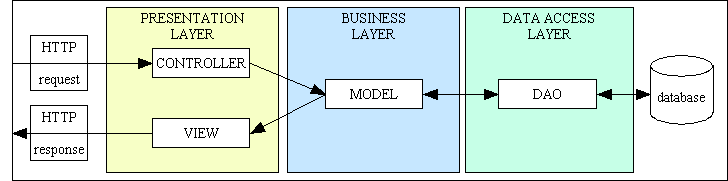

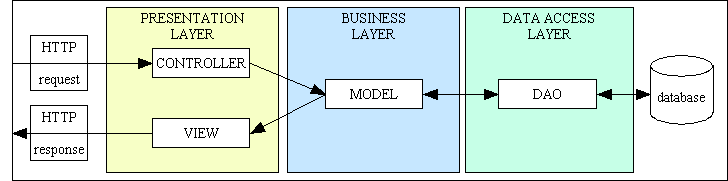

Figure 1 - MVC plus 3 Tier Architecture

According to the PHP manual the autoloading mechanism was added to the language to solve the following problem:

Many developers writing object-oriented applications create one PHP source file per class definition. One of the biggest annoyances is having to write a long list of needed includes at the beginning of each script (one for each class).

There is nothing wrong with having a single include/require statement for each class provided that the class file encapsulates all the properties and all the operations for an entity (or a service). If those properties or operations have been split across multiple class files in multiple directories as a result of using the wrong version of best practices then it is no wonder that multiple include/require statements will be needed to load all the micro-classes for that entity or service.

The main reason that some programmers need a long list of include statements is because of poor programming practices. These problems are self-inflicted and should be avoided.

Instead of dealing with the problems caused by these bad practices I prefer to use a more pragmatic approach and avoid the problems altogether by following a different set of practices:

classes subdirectory.

When I first read about autoloaders I realised that they would not work for me as they translate the "_" (underscore) character in class names to the "/" (slash) directory separator in path names, so if I supply a name such as class_name it will be converted to class/name. I have a separate class for each table in my database, and the class name is the same as the table name, and as I use lowercase and underscores in all of my table names (none of this CamelCase crap for me) the use of any autoloader would prove to be totally unworkable.
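As a rough illustration of the naming clash described above, the following sketch shows the underscore-to-separator translation used by PEAR-style (PSR-0) autoloaders. The function name and the table name are hypothetical, and this is only one possible mapping rule, not the behaviour of every autoloader.

```php
<?php
// Hypothetical helper: maps a class name to a relative file path the way
// PEAR/PSR-0 style autoloaders do, turning each "_" into a directory separator.
function underscore_style_path(string $className): string
{
    return str_replace('_', DIRECTORY_SEPARATOR, $className) . '.php';
}

// A class named after the (hypothetical) table "order_item" is therefore
// looked for inside an "order" subdirectory, not in a file named order_item.php.
echo underscore_style_path('order_item'), PHP_EOL;
```

With this rule a table class whose name contains underscores would have to live in a nested directory that matches the pieces of its name, which is the mismatch the paragraph complains about.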

Some criticisms from other developers show that they do not understand why autoloaders were added to the language. Take the following as examples:

... you admitted to not using an autoloader which means you've personally memorized the file paths to all you classes. It would take someone else a while to do such a memorization, so on that factoid alone I can determine your framework, however it is set up, would not be easy to learn.

I do not have a long list of file paths for my classes. I have the INCLUDES directory for all framework classes, and a separate CLASSES subdirectory for each subsystem. These are all identified on the INCLUDE_PATH directive, so I have nothing to memorise.

tony_marston404 Well clearly you do! You used the fact that require_once lines are needed for classes as an example of DI needing more lines of code. Those repeated require_once lines are a perfect example of where an autoloader can solve the issues you had. Besides, I already answered this in detail with an example, using your own codebase that solves the problem.

I do not use an autoloader for the simple reason that I do not have the problem for which an autoloader is the solution. Besides, an autoloader cannot do anything that cannot already be done using include_path.

I do not have to write a long list of includes at the beginning of each script. I never require more than one include for each class file. While there may be a small number of Controller scripts which access more than one class, those are reusable scripts which I wrote 20 years ago, so I do not have to write them all over again. I certainly do not see any need to go back and rewrite them to use an autoloader when I can already achieve what needs to be done using the INCLUDE_PATH directive. When I need to load a dependency into an entity I use my singleton class, and I see no reason to change something that works unless there are proven benefits. I'm afraid that satisfying your ridiculous demands does not fall into the category of "good reason".

Although I do not use autoloaders anywhere in my own code I sometimes have to install a 3rd-party library, such as PhpSpreadsheet (formerly PHPExcel), symfony/mailer (formerly SwiftMailer) and php-imap. It is only after looking at the source code for these libraries that I realised where an autoloader could be useful. Why? Because of the enormous number of files which these libraries use. Take a look at the following counts:

| library | files | directories |
|---|---|---|
| PHPExcel | 215 | 40 |
| PhpSpreadsheet | 519 | 70 |
| SwiftMailer | 161 | 23 |
| symfony/mailer | 58 | 12 |
| php-imap | 63 | 9 |

Anybody who thinks it's a good idea to split the code for a single entity across that number of files and directories needs their head examined. It is only because of this self-inflicted "problem" that an autoloader is required in order to replace the need to insert a huge number of include/require statements so that the entity's class can be instantiated into a fully-functional object. By following the principle of Encapsulation and placing ALL the data for an entity and ALL the operations that can be performed on that data into a single class I do not need more than one include/require statement to load all the code for any class.

Why do these libraries require so many files? Because their developers are following the wrong version of "best practices". For this I blame Robert C. Martin who started the rot in his paper The Single Responsibility Principle (SRP) which starts with the following:

This principle was described in the work of Tom DeMarco and Meilir Page-Jones. They called it cohesion. They defined cohesion as the functional relatedness of the elements of a module. In this chapter we'll shift that meaning a bit, and relate cohesion to the forces that cause a module, or a class, to change.

SRP: The Single Responsibility Principle

A CLASS SHOULD HAVE ONLY ONE REASON TO CHANGE.

I don't know about you, but to my mind it is difficult to see how "functional relatedness" and "reason to change" are connected. By attempting to "shift that meaning a bit" what he actually did was to shift it a lot and lead people up the wrong path and in the wrong direction. I found his statement so vague and confusing that I stopped further reading of that principle. I was not the only one who was confused as legions of other programmers tried to interpret what it meant, which caused them to come up with some very weird mis-interpretations followed by some very weird implementations. The most common interpretation of "too many responsibilities" was "doing too much" which they decided could only be measured by counting the lines of code (LoC). In their tiny minds if a class had more than X methods, and each method had more than Y lines of code (where the numbers X and Y vary depending on the IQ of the person you are talking to) then it surely must be doing too much and therefore should be split into smaller pieces. This situation got so bad that Uncle Bob had to produce a follow-up article in which he tried to answer the following question:

What defines a reason to change?

Some folks have wondered whether a bug-fix qualifies as a reason to change. Others have wondered whether refactorings are reasons to change.

The only sensible answer to this question which I found later in his article was:

This is the reason we do not put SQL in JSPs. This is the reason we do not generate HTML in the modules that compute results. This is the reason that business rules should not know the database schema. This is the reason we separate concerns.

Notice that while discussing the topic of responsibilities he switched to using the term concerns, thereby confirming that SRP and SoC mean exactly the same thing.

He repeated this explanation yet again in Test Induced Design Damage? in which he said:

How do you separate concerns? You separate behaviors that change at different times for different reasons. Things that change together you keep together. Things that change apart you keep apart.

GUIs change at a very different rate, and for very different reasons, than business rules. Database schemas change for very different reasons, and at very different rates than business rules. Keeping these concerns [GUI, business rules, database] separate is good design.

Notice that in the above two quotes he is saying that the words "responsibility" and "concern" mean exactly the same thing, so they are NOT separate principles with different meanings which are applied in different stages of your coding. Notice also that if you look at those three areas - GUI, business rules, and database access - in the terms of "functional relatedness" (on which the whole idea of cohesion is based) you should see that the GUI is dealing with HTML forms, the database is dealing with SQL queries, while the business rules are entirely separate from the other two. Keeping these separate is good design.

It was only at this point that I realised that what he was actually talking about was the spitting image of the 3 Tier Architecture with its Presentation (UI) layer, Business layer and Data Access layer, which I had used as the basis for my framework from the very start. This meant that I had been following his principle after all, but I didn't know it as his description was so badly phrased it was unintelligible.

However, Uncle Bob then went and muddied the waters even more by publishing an article called One Thing: Extract till you Drop which encouraged developers to keep on extracting until it was impossible to extract anything more. This may sound like a good idea to the uninitiated, but to some people, myself included, it goes much too far. Like any idea it may be beneficial up to a point, but you have to know when to stop. You have to be able to see when enough is enough and anything more is too much.

Unfortunately when it came to implementing this idea some programmers, especially those who create libraries which you install using Composer, completely misread the article and went too far. Uncle Bob's sample code clearly shows the creation of more and smaller methods within the same class, but some idiot somewhere got it into his thick head that each of those methods should actually go into its own separate class. This misinterpretation was compounded even further by the idea that each of those subordinate classes should be placed in a subdirectory which is named after the class which is calling that subordinate class. This leads to a large number of small classes which are scattered across a hierarchy of directories and subdirectories which makes it extremely difficult to locate the piece of code which performs a particular activity. I have personally found a bug in two of these libraries, and I have been unable to fix the faulty code for the simple reason that I can't even find it. Even if I do find it I then have to find where it is being called from, and tracing backwards through a hierarchy of tiny methods in tiny classes is enough to make a saint swear.

By following the artificial rule Extract till you Drop what programmers fail to realise is that they are actually breaking other, more fundamental principles: they violate encapsulation and they fail to achieve high cohesion. Once you have identified an entity with which you will be working, such as a database table, you should create a single class for that entity with enough functionality to maintain its state and process its business rules. You need only separate out the code which is not functionally related, such as GUI logic and data access logic. If you continue any further than that with this functional decomposition you will transform this cohesive whole into a collection of fragments which is more difficult for the human mind to follow. The code is also slower to execute as it has to load and instantiate a much higher number of classes.

When I look at the file counts for those 3rd-party libraries I am absolutely horrified. As far as I am concerned a library for sending emails should have a single class for the email entity plus a separate class for each transport mechanism, which is a service. Anything less is not enough while anything more is too much. I have found bugs in two of those libraries, but although I have worked out what is wrong I cannot fathom where it is going wrong for the simple reason that I cannot trace a path through that spaghetti code.

When you use spl_autoload_register() to register the user-defined function that will perform the directory search and load the required file you need to be aware that this function will be called every time a new file needs to be loaded. I have stepped through one of these 3rd party libraries with my debugger just to see what happens, and I was appalled to see exactly how many instructions were being executed each time. For one library I counted 40 instructions, so if you multiply that by the number of files in that library you should realise the overhead of executing that many instructions just to load that many files.
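To make that per-file cost concrete, here is a minimal sketch which counts how often a registered callback runs. The class names and the temporary directory are hypothetical, and the callback is far simpler than the ones found in real libraries, so it only demonstrates the "one call per class which is not yet loaded" behaviour and not the number of instructions inside any particular library.

```php
<?php
// Build a throwaway directory holding three hypothetical class files.
$dir = sys_get_temp_dir() . DIRECTORY_SEPARATOR . 'autoload_demo_' . uniqid();
mkdir($dir);
foreach (['DemoAlpha', 'DemoBeta', 'DemoGamma'] as $name) {
    file_put_contents($dir . DIRECTORY_SEPARATOR . $name . '.php', "<?php class $name {}");
}

$autoloadCalls = 0;
spl_autoload_register(function (string $class) use ($dir, &$autoloadCalls): void {
    $autoloadCalls++;   // runs once for every class that is not yet defined
    $file = $dir . DIRECTORY_SEPARATOR . $class . '.php';
    if (is_file($file)) {
        require $file;
    }
});

new DemoAlpha();
new DemoBeta();
new DemoGamma();
new DemoAlpha();   // already defined, so the callback is not invoked again

echo "autoloader calls: $autoloadCalls", PHP_EOL;

// Remove the throwaway files.
array_map('unlink', glob($dir . DIRECTORY_SEPARATOR . '*.php'));
rmdir($dir);
```

Whatever work the callback performs is repeated for each distinct class, so a library with hundreds of class files pays that cost for every one of those files which a request actually touches.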

Before I started programming with PHP in 2002 I had been involved in designing and building enterprise applications for 20 years in two different languages, COBOL and UNIFACE. During this time I encountered many different programming styles, some good and some not-so-good, and learned only to follow those practices which produced software which is both cost effective and maintainable. By "maintainable" I mean "readable", so that a person looking at the code for the first time can quickly discover how it was structured so that they can follow a path through that structure. Code which does not have a discernible structure is called spaghetti code.

When teaching myself PHP and how to take advantage of its object oriented capabilities the first question I had to answer was what "things" do I encapsulate?

I was already very familiar with writing programs which manipulated the contents of database tables and so were centered around those tables. UNIFACE, my previous language, was based on the creation of components which dealt with "entities" in its Application Model where each entity mapped directly to a table in the database, so I saw no reason why I shouldn't create a separate class for each database table.

Note that not all objects will be entities (which have state) as some of them will be services (which do not have state), as explained in Object Classification.

For a definition of anemic micro-classes please refer to Anemic Domain Model by Martin Fowler.

The next decision was to decide what properties and methods to put inside each class. According to what I had read about encapsulation it meant "The act of placing data and the operations that perform on that data in the same class". The "data" part was obvious as it meant the data which belonged on that table. The "operations" part was also a no-brainer for me as I already knew that each and every table is subject to exactly the same set of Create, Read, Update and Delete (CRUD) operations, so I created a method to correspond with each of these operations. I also took the words "in the same class" to mean that ALL the data and ALL the operations should be in the SAME class, which meant that the idea of spreading either of those two across multiple micro-classes would be against the principles of OOP and should therefore be avoided like the plague. Similarly the idea of creating a class which was responsible for more than one table was also unacceptable.
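A minimal sketch of that idea might look like the following. The class name, the method names and the in-memory array which stands in for the database are all assumptions made for illustration; this is not the framework's actual table class.

```php
<?php
// Hypothetical table class: the class name matches the table name, and the
// data plus all four CRUD operations for that table live in this one class.
// An in-memory array stands in for the real database (an assumption).
class customer_order
{
    private array $rows = [];   // stands in for the table's contents
    private int $nextId = 1;

    public function insertRecord(array $fields): array
    {
        $fields['id'] = $this->nextId++;
        $this->rows[$fields['id']] = $fields;
        return $fields;
    }

    public function getData(int $id): ?array
    {
        return $this->rows[$id] ?? null;
    }

    public function updateRecord(int $id, array $changes): ?array
    {
        if (!isset($this->rows[$id])) {
            return null;
        }
        $this->rows[$id] = array_merge($this->rows[$id], $changes);
        return $this->rows[$id];
    }

    public function deleteRecord(int $id): bool
    {
        $existed = isset($this->rows[$id]);
        unset($this->rows[$id]);
        return $existed;
    }
}

$orders = new customer_order();
$row = $orders->insertRecord(['status' => 'new']);
$orders->updateRecord($row['id'], ['status' => 'paid']);
```

Everything that can be done to the table is found by opening one file, which is the point being made about keeping the data and its operations "in the same class".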

Note that when I say "all the operations that perform on that data" I am excluding those operations which belong outside of the Business layer. I had already become familiar with and become a fan of the 3-Tier Architecture with its separate Presentation, Business and Data Access layers, so my code was built around this architecture from the very beginning, as shown in Figure 1 below. Note that after I split my Presentation layer into two separate components I realised that I also had an implementation of the Model-View-Controller design pattern.

Figure 1 - MVC plus 3 Tier Architecture

Note that this diagram does not mean that there is only one version of each of those components. The actual numbers are as follows:

This meant that I was already matching what Uncle Bob wrote about SRP when he said "Keeping these concerns [GUI, business rules, database] separate is good design", so as far as I am concerned any more separation would be excessive. Note that I split my Presentation layer into two parts as I had already decided to produce all HTML output using a templating engine. This meant that each of my table classes resided in the Business layer and was also the Model in the Model-View-Controller design pattern.

Note that in the above diagram all the Models are entities while the Controllers, Views and DAOs are services. This is explained in Object Classification.
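The division of labour between those four components can be sketched roughly as follows. Every class and function name here is hypothetical, the DAO returns canned rows instead of talking to a database, and the View builds its HTML by hand instead of using a templating engine, so treat it as an outline of the separation and not as the framework's code.

```php
<?php
// Data Access layer (a service): the only place that would build SQL.
class demo_dao
{
    public function select(string $table): array
    {
        // A real DAO would query the named table; canned rows are used here.
        return [['id' => 1, 'name' => 'Widget']];
    }
}

// Business layer / Model (an entity): one class for one table.
class product
{
    public function getData(): array
    {
        $dao = new demo_dao();
        return $dao->select('product');
    }
}

// View (a service): the only place that produces HTML.
class demo_view
{
    public function render(array $rows): string
    {
        $items = '';
        foreach ($rows as $row) {
            $items .= '<li>' . htmlspecialchars($row['name']) . '</li>';
        }
        return '<ul>' . $items . '</ul>';
    }
}

// Controller (a service): receives the request and calls the Model and the View.
function list_controller(product $model, demo_view $view): string
{
    return $view->render($model->getData());
}

$html = list_controller(new product(), new demo_view());
echo $html, PHP_EOL;
```

The HTML lives only in the View, the data access only in the DAO, and the Model knows nothing about either format, which is the three-way split quoted above.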

An enterprise application can be comprised of a number of different subsystems or business domains which are fully integrated with each other, so I developed the RADICORE framework to help me build and run each of those subsystems as quickly as possible. Each subsystem has its own database with its own collection of files which exist in its own directory in the file system, as shown in this directory structure. This means that all files can only exist in one of two places:

<subsystem>/classes directories for files pertaining to that subsystem.

As I started development of my framework several years before autoloaders were added to the language I became familiar with the INCLUDE_PATH directive which specifies a list of directories through which the include, require, fopen(), file(), readfile() and file_get_contents() functions will search when looking for files. As the number of directories in which a file may exist is quite small it does not take much effort to maintain this list in the current INCLUDE_PATH.
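As a minimal sketch of that mechanism, the following creates two temporary directories which stand in for the framework directory and one subsystem's classes directory, adds them to include_path, and then loads a class file by name alone. The directory layout, the "sales" subsystem, the sales_order class and the .class.inc file suffix are all assumptions made for the demonstration.

```php
<?php
// Throwaway directories standing in for the framework's includes directory
// and one subsystem's classes directory (hypothetical layout).
$base     = sys_get_temp_dir() . DIRECTORY_SEPARATOR . 'include_path_demo_' . uniqid();
$includes = $base . DIRECTORY_SEPARATOR . 'includes';
$classes  = $base . DIRECTORY_SEPARATOR . 'sales' . DIRECTORY_SEPARATOR . 'classes';
mkdir($includes, 0777, true);
mkdir($classes, 0777, true);
file_put_contents(
    $classes . DIRECTORY_SEPARATOR . 'sales_order.class.inc',
    '<?php class sales_order {}'
);

// Add both directories to include_path once, for example during start-up.
set_include_path($includes . PATH_SEPARATOR . $classes . PATH_SEPARATOR . get_include_path());

// A single require statement, with no directory name, now finds the class file.
require_once 'sales_order.class.inc';
$order = new sales_order();
echo get_class($order), PHP_EOL;
```

The class name keeps its underscores and the file name matches it exactly; the only thing that has to be maintained is the short list of directories.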

As I maintain all the operations for an entity or a service in a single class file instead of a myriad of small files in a multitude of directories and sub-directories this means that I can load each of those class files with a single include or require statement where the list of directories to search through is maintained in the INCLUDE_PATH. This leads me to the following revelation:

Question: How many include statements does the developer need to write?

Answer: None.

This is because the vast majority of them either exist within a pre-written framework component, such as the individual controller scripts or the abstract table class, or they exist in a component script which is generated by the framework. In either case the developer does nothing. If an additional class is required within the processing of a table class then this can be achieved using the singleton function which again uses the INCLUDE_PATH.
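A singleton helper of that kind could be sketched as below. This is a hypothetical outline and not the framework's actual singleton code: the demo_singleton class, the tax_rate class and the .class.inc suffix are assumptions, and the temporary directory exists only so that the example can run on its own.

```php
<?php
// Hypothetical singleton helper: loads a class file on first use and then
// hands back the same instance on every later request.
class demo_singleton
{
    private static array $instances = [];

    public static function getInstance(string $class): object
    {
        if (!isset(self::$instances[$class])) {
            if (!class_exists($class, false)) {
                // No directory is given: include_path decides where to look.
                require_once $class . '.class.inc';
            }
            self::$instances[$class] = new $class();
        }
        return self::$instances[$class];
    }
}

// Demo set-up: a throwaway directory with one class file, added to include_path.
$dir = sys_get_temp_dir() . DIRECTORY_SEPARATOR . 'singleton_demo_' . uniqid();
mkdir($dir);
file_put_contents($dir . DIRECTORY_SEPARATOR . 'tax_rate.class.inc', '<?php class tax_rate {}');
set_include_path($dir . PATH_SEPARATOR . get_include_path());

$first  = demo_singleton::getInstance('tax_rate');
$second = demo_singleton::getInstance('tax_rate');
var_dump($first === $second);
```

The calling code names the class it wants and nothing else; the one require statement lives inside the helper, so the developer does not write any.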

I dislike the idea of using an autoloader simply because it is a solution to a problem which should not exist in the first place. By spreading logic across a large number of micro-classes the developer is violating some of the fundamental principles of OOP, that of encapsulation and high cohesion. The idea that operations which are closely related should be grouped together in the same module is so obvious to me that I cannot understand why anyone would want to do anything different. The only excuse would be that they were taught to do it that way, in which case their teacher should be held responsible.

I developed my code years before autoloaders ever existed, and because I adopted a different version of "best practices" I never had the problem for which they were the solution. I could achieve everything I needed by using the INCLUDE_PATH directive instead of writing a custom function which came with a large overhead in processing cycles. If I had changed my code to implement a solution which I did not need and which would have produced zero benefit I would have violated the YAGNI principle.

As far as I am concerned those developers who insist on using autoloaders to solve the problem of "too many include statements" have created the problem themselves with their mistakes in their coding style. These mistakes are as follows:

The end result of this ridiculous exercise is a large collection of anemic micro-classes which are spread over a hierarchy of directories in the file system. Instead of trying to solve the problem for which autoloaders appear to be the solution they should avoid the mistake of creating the problem in the first place. They obviously have not heard of the old saying "Prevention is better than Cure", otherwise they would be doing that already. Instead of making the mistakes listed above these developers should learn to follow some fundamental practices:

This means that when I am looking for the logic in one of those areas, either to fix a bug or make a change, I can look inside a single object instead of sifting through a collection of fragments.

By constantly listening to bad advice without having the ability to spot better alternatives I fear that these followers will end up being nothing more than code monkeys, copycats, buzzword programmers and cargo cult programmers who are incapable of having an original thought.

Here endeth the lesson. Don't applaud, just throw money.

These are reasons why I consider some ideas on how to do OOP "properly" to be complete rubbish: