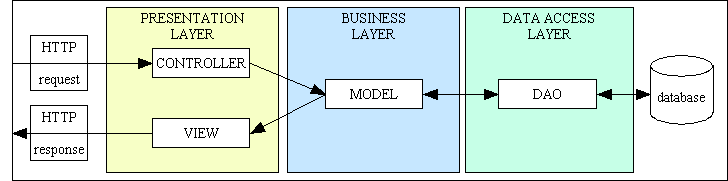

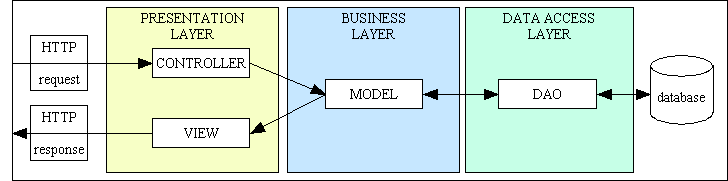

Figure 1 - MVC plus 3 Tier Architecture

According to a lot of OO purists, every programmer MUST follow the SOLID and GRASP principles, otherwise it will be the end of civilisation as we know it.

I have already written my opinion of the SOLID principles, so I think it only fair that I give the same treatment to the GRASP principles.

Let me start by saying that, of all the types of software that could be written, the only type that concerns me is the web-based database application. The RADICORE framework was designed specifically for applications which have HTML documents at the front end, a relational database at the back end, and software in the middle to handle all the business rules. The architecture it uses is a combination of the 3-Tier Architecture and the Model-View-Controller (MVC) design pattern, as shown in Figure 1 above.

A more detailed description is also available at RADICORE - A Development Infrastructure for PHP.

As explained in Object Classification this framework recognises only two categories of object - Entities, which are stateful, and Services, which are stateless. The objects in Figure 1 above are categorised as follows:

Note that all services are pre-written and supplied with the framework. The initial versions of Models are generated by the framework from the database structure.

There is a separate class in the business/domain layer for each table in the database which inherits all of its standard boilerplate code from an abstract table class which is also supplied by the framework. This enables the use of the Template Method Pattern so that custom code can be inserted into any concrete table class using any of the available "hook" methods.
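The arrangement described above can be sketched roughly as follows. This is a minimal illustration and not the framework's actual code: the names `AbstractTable`, `Person`, `insertRecord()`, `doInsert()`, `preInsert()` and `postInsert()` are all hypothetical, and the database access is replaced with a stand-in.

```php
<?php
// Hypothetical sketch of the Template Method Pattern; all names are illustrative.
abstract class AbstractTable
{
    protected string $tableName = '';
    public array $errors = [];

    // The template method: the fixed sequence of steps is written once, here.
    final public function insertRecord(array $row): array
    {
        $row = $this->preInsert($row);      // hook: custom code before the insert
        if ($this->errors) {
            return $row;                    // a hook reported a problem, so stop
        }
        $row = $this->doInsert($row);       // standard boilerplate step
        return $this->postInsert($row);     // hook: custom code after the insert
    }

    // Stand-in for the real database access, which is out of scope here.
    protected function doInsert(array $row): array
    {
        $row['_saved_to'] = $this->tableName;
        return $row;
    }

    // Hooks do nothing by default; a subclass overrides only those it needs.
    protected function preInsert(array $row): array
    {
        return $row;
    }

    protected function postInsert(array $row): array
    {
        return $row;
    }
}

class Person extends AbstractTable
{
    public function __construct()
    {
        $this->tableName = 'person';        // the class configures itself
    }

    // Custom business rule inserted through a hook method.
    protected function preInsert(array $row): array
    {
        if (empty($row['email'])) {
            $this->errors['email'] = 'Email is required';
        }
        return $row;
    }
}

$person = new Person();
$saved  = $person->insertRecord(['email' => 'a@example.com']);
```

In this sketch the concrete class contains nothing except its own configuration and its own business rule; the processing sequence is inherited.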

Before jumping into my first object oriented language I asked myself the question What is this thing called OOP? Why is it different from other paradigms?

The simplest answer I found went along the lines of:

Object Oriented Programming means writing programs which are oriented around objects. Such programs can take advantage of Encapsulation, Inheritance and Polymorphism to increase code reuse and decrease code maintenance.

By using this definition as my guide I endeavoured to create code with more reusable modules. The more reusable code I had at my disposal, the less code I had to write to get the job done, and the sooner I could get the job done the more productive I would be. I have subsequently encountered certain principles and practices which would require more code to get the job done than I currently employ, so forgive me if I do not react favourably to such "advice".

I have found numerous different descriptions of what this term is supposed to mean, and I don't follow any of them.

The controller pattern is a common tool in object-oriented software design. Though most people might only associate the controller with the ancient architecture of the MVC (Model-View-Controller - Which is far too often used for cases it absolutely doesn't fit, but that's a story for a different day), the controller is a construct of itself.

As the name implies, the controller's work is to 'control' any events that are not directly related to the user interface. But controlling does not mean implementing. The controller consists of barely any logic. It is merely a mediator between the presentation layer and the (core) logic.

To say that MVC is an ancient architecture which is often used for cases it absolutely doesn't fit shows that the author has never worked on a web application which uses that architecture. While it may have originally been designed for bit-mapped displays in a Graphical User Interface (GUI) which responds to mouse events it can be used just as well when the user interface is an HTML document which transmits HTTP requests, as shown in Figure 1 above. I have never seen any description of MVC which says that the display MUST be bit-mapped and can ONLY respond to mouse events, so saying that it is not a good fit for web applications is complete and utter nonsense.

The Controller principle assigns the responsibility of handling system events to a dedicated class, which manages and coordinates the system's behavior. This principle helps maintain a clean separation between the presentation and domain layers.

Example: In a web application, a UserController class can handle user-related events, such as registering and logging in, delegating the actual processing to other classes.

Having a separate controller for each domain object (the Model in MVC), each performing similar actions to every other controller, would require duplicate code to perform those similar actions. It would be better to have a fixed set of controllers which can perform their actions on any Model.

- Deals with how to delegate the request from the UI layer objects to domain layer objects.

- when a request comes from UI layer object, Controller pattern helps us in determining what is that first object that receive the message from the UI layer objects.

- This object is called controller object which receives request from UI layer object and then controls/coordinates with other object of the domain layer to fulfill the request.

- It delegates the work to other class and coordinates the overall activity.

We can make an object as Controller, if

- Object represents the overall system (facade controller)

- Object represent a use case, handling a sequence of operations (session controller).

In my implementation the Controller does not receive a request from a UI layer object as the Controller *IS* the UI layer object.

The controller pattern assigns the responsibility of dealing with system events to a non-UI class that represents the overall system or a use case scenario. A controller object is a non-user interface object responsible for receiving or handling a system event.

Problem: Who should be responsible for handling an input system event?

Solution: A use case controller should be used to deal with all system events of a use case, and may be used for more than one use case. For instance, for the use cases Create User and Delete User, one can have a single class called UserController, instead of two separate use case controllers. Alternatively a facade controller would be used; this applies when the object with responsibility for handling the event represents the overall system or a root object.

The idea that a Controller sits between the User Interface (UI) and the business/domain layer is not one that I share. In my framework the Controller is the object within the Presentation (UI) layer which receives the HTTP request. It then calls one or more methods on one or more Model objects in the business/domain layer after which it calls the View object, which also resides within the Presentation (UI) layer, which extracts all the data from the Model(s) and transforms it into the format required by the user.

I do not have any Controllers which handle all the use cases for an entity (Model) in the business/domain layer. Each Controller handles a single use case for an unknown entity where the identity of the entity is supplied at run time using a component script, thus demonstrating my use of polymorphism and dependency injection. Instead of a separate Controller for each entity I have a separate Controller for each Transaction Pattern where each pattern can be applied to any entity.
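As a rough illustration of a Controller which handles one use case for an entity whose identity is only supplied at run time, consider the sketch below. The names `Customer`, `Product` and `updateController()` are hypothetical, and the "component script" is reduced to a single function call so that the example fits in one file.

```php
<?php
// Hypothetical sketch: two Models which share the same method signature.
class Customer
{
    public array $errors = [];

    public function updateRecord(array $data): array
    {
        return ['table' => 'customer'] + $data;
    }
}

class Product
{
    public array $errors = [];

    public function updateRecord(array $data): array
    {
        return ['table' => 'product'] + $data;
    }
}

// One Controller for the "update" use case. It never names a specific Model;
// the class name is supplied from outside at run time.
function updateController(string $table_id, array $post): array
{
    $dbobject = new $table_id();
    return $dbobject->updateRecord($post);
}

// Each "component script" then needs only to identify the Model and hand
// control to the shared Controller.
$result1 = updateController('Customer', ['name' => 'Smith']);
$result2 = updateController('Product', ['name' => 'Widget']);
```

Adding a third entity to this sketch would require a new Model class but no new Controller code.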

All my Controllers are use case controllers as I have never recognised the need for other types.

Why should I create a whole class just to execute the new keyword?

A creator is a class that is responsible for creating instances of objects. Larman defines the following cases as B is the creator of A:

- B aggregates A objects

- B contains A objects

- B records instances of A objects

- B closely uses A Objects

- B has the initializing data that will be passed to A when it is created

So an example for a creator could be a library that contains books. In that case, the library would be the creator of books, even though this sounds syntactically weird as natural language.

That is not how it works in a database. Library and Book are separate entities, so have separate tables in the database. It is not necessary to go through the Library Table in order to create a Book, so it should not be necessary to go through the Library object in order to create a Book object. All it requires in a database is for the Book table to have a foreign key to a record on the Library table.

The Creator principle involves assigning the responsibility of creating an object to a class that uses the object, has the necessary information to create it, or aggregates the object. This principle ensures a clear separation of concerns and simplifies object creation.

Example: In an e-commerce application, the Order class might be responsible for creating OrderItem objects, as it uses and aggregates these objects.

I agree with the idea that the responsibility of creating an object is given to a class that uses the object, which means that the Controller is responsible for creating the Model before it uses it. When dealing with a parent-child relationship it is the Controller which creates and communicates with both the parent and child objects.

Example:

- Who creates an Object? Or who should create a new instance of some class?

- "Container" object creates "contained" objects.

- Decide who can be creator based on the objects association and their interaction

- Consider VideoStore and Video in that store.

- VideoStore has an aggregation association with Video. I.e, VideoStore is the container and the Video is the contained object.

- So, we can instantiate video object in VideoStore class

I disagree. In a parent-child relationship it is not necessary to go through the parent table in order to access the child table, it is only necessary to use the primary key of the parent to access the child via its foreign key. All that is necessary is to ask the parent object for the primary key of its current database record, then use that primary key to construct the foreign key which you can then pass to the child object. You do not need to remain inside the parent object to access the child object.

The creation of objects is one of the most common activities in an object-oriented system. Which class is responsible for creating objects is a fundamental property of the relationship between objects of particular classes.

Problem: Who creates object A?

Solution: In general, Assign class B the responsibility to create object A if one, or preferably more, of the following apply:

- Instances of B contain or compositely aggregate instances of A

- Instances of B record instances of A

- Instances of B closely use instances of A

- Instances of B have the initializing information for instances of A and pass it on creation.

I treat each table in the database as an independent object and therefore have a separate class for each table. Each class therefore has the responsibility for carrying out the business rules for a single database table, thus adhering to the Single Responsibility Principle (SRP). I do not have any composite or aggregate objects where one object is responsible for creating or using other objects. There are no such things as composite or aggregate tables in a database as each table is a standalone entity which is subject to the same rules as every other table.

If a table is related to other tables, either as a parent or a child, then I have specialised Controllers which deal with that relationship. For example, for the parent classes above (Library, Order and Video Store) I would have a family of forms based on the LIST1 pattern while for the child classes (Book, OrderItem and Video) I would have a family of forms based on the LIST2 pattern. The LIST2 pattern forces the user to select a row from the parent table before it will allow access to the child table.

Note that in the LIST2 pattern the parent and child tables are treated as separate objects. The Controller first reads a row from the parent table to obtain its primary key, then translates this into the foreign key of the child before activating the child. I never have code inside a parent class to handle any associations/relationships with other classes. I do not have custom code within any parent object to handle communications with any of its children, instead I use generic code with the Controller that deals with parent-child relationships.
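The sequence described above might be sketched as follows. This is an assumption-laden illustration rather than the framework's own code: `ArrayTable`, `getData()`, `getPkey()` and `list2Controller()` are hypothetical names, and the rows are held in arrays instead of a database so that the example is self-contained.

```php
<?php
// Hypothetical stand-in for a table class, with rows held in an array.
abstract class ArrayTable
{
    protected array $rows = [];
    protected array $pkeyColumns = [];

    // Return every row whose columns match all of the supplied values.
    public function getData(array $where): array
    {
        $matches = [];
        foreach ($this->rows as $row) {
            if (array_intersect_assoc($where, $row) == $where) {
                $matches[] = $row;
            }
        }
        return $matches;
    }

    // Extract the primary key of a row as column => value pairs.
    public function getPkey(array $row): array
    {
        return array_intersect_key($row, array_flip($this->pkeyColumns));
    }
}

class Order extends ArrayTable
{
    protected array $pkeyColumns = ['id'];
    protected array $rows = [
        ['id' => 1, 'customer' => 'Smith'],
        ['id' => 2, 'customer' => 'Jones'],
    ];
}

class OrderItem extends ArrayTable
{
    protected array $pkeyColumns = ['order_id', 'item_no'];
    protected array $rows = [
        ['order_id' => 1, 'item_no' => 1, 'product' => 'Widget'],
        ['order_id' => 1, 'item_no' => 2, 'product' => 'Gadget'],
        ['order_id' => 2, 'item_no' => 1, 'product' => 'Gizmo'],
    ];
}

// Generic parent-child Controller: neither table class knows about the other.
// $keyMap says which parent column supplies which child column.
function list2Controller(ArrayTable $parent, ArrayTable $child, array $parentWhere, array $keyMap): array
{
    $parentRow = $parent->getData($parentWhere)[0];   // assumes a matching row exists
    $pkey = $parent->getPkey($parentRow);

    // Translate the parent's primary key into the child's foreign key.
    $fkey = [];
    foreach ($keyMap as $parentColumn => $childColumn) {
        $fkey[$childColumn] = $pkey[$parentColumn];
    }
    return $child->getData($fkey);
}

$items = list2Controller(new Order(), new OrderItem(), ['id' => 1], ['id' => 'order_id']);
```

Note that neither `Order` nor `OrderItem` contains any code which refers to the other; the relationship is handled entirely within the Controller.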

I never have to use a factory pattern to create and configure instances as each table class has only one configuration, and it configures itself within its constructor.

Indirection isn't a pattern but an idea. It is also of no use of its own, only in combination with other ideas such as low coupling. The goal behind indirection is to avoid direct coupling.

To pick up an earlier example the controller for example is a kind of indirection between the UI and the logic. Instead of coupling the UI directly to the logic, the controller decouples this layer and thereby comes with all the advantages of indirection.

The Controller does NOT decouple the Presentation/UI layer from the business/domain layer for the simple reason that the Controller already exists in the Presentation layer, as shown earlier in Figure 1 above. The Controller communicates directly with the Model.

Indirection introduces an intermediate class or object to mediate between other classes, helping to maintain low coupling and simplify interactions. This principle can be applied through various patterns, such as the Facade or Adapter patterns.

I disagree completely. Indirection does NOT help to maintain low coupling, nor does it simplify interactions. Coupling only exists when one module calls another via a method signature, and adding indirection does not eliminate or even reduce the possibility of the ripple effect due to a change in one of the modules. All it does is increase the number of method calls.

- How can we avoid a direct coupling between two or more elements.

- Indirection introduces an intermediate unit to communicate between the other units, so that the other units are not directly coupled.

- Benefits: low coupling

- Example: Adapter, Facade, Observer

Direct coupling is not an issue if the method call already exhibits low coupling. If two modules are already tightly coupled then adding indirection will do nothing but replace one tightly-coupled call with two tightly-coupled calls.

The indirection pattern supports low coupling and reuse potential between two elements by assigning the responsibility of mediation between them to an intermediate object. An example of this is the introduction of a controller component for mediation between data (model) and its representation (view) in the model-view-controller pattern. This ensures that coupling between them remains low.

Problem: Where to assign responsibility, to avoid direct coupling between two (or more) things? How to de-couple objects so that low coupling is supported and reuse potential remains higher?

Solution: Assign the responsibility to an intermediate object to mediate between other components or services so that they are not directly coupled. The intermediary creates an indirection between the other components.

Indirection does not change a tightly-coupled connection to a loosely-coupled connection, it merely replaces a single tightly-coupled connection with two tightly-coupled connections.

I don't know about the youngsters of today, but when I was at school I was taught that the shortest distance between two points was a straight line, and the introduction of more points in between led to a journey that was longer than it could be. If the goal of indirection is to avoid direct coupling then the result is indirect coupling and not decoupling as many would suggest. If two modules are coupled, such as when a Controller (module C) calls a Model (module M), then there is a dependency between the two modules. It would be correct to say that C is dependent on M as it requires the services of M to complete its task, but it would be wrong to say that M is dependent on C as there is no call from M to C.

If you introduce an intermediate object X into the chain of calls so that instead of C-to-M you have C-to-X-to-M you should see that you have doubled the number of calls, but C is still dependent on M. The dependency may be indirect, but it is still there. Increasing the number of calls is not a good idea, as stated in an article called Structured Design published in 1974 in the IBM Systems Journal Vol. 13, No 2 which said:

The fewer and simpler the connections between modules, the easier it is to understand each module without reference to other modules. Minimizing connections between modules also minimises the paths along which changes and errors can propagate into other parts of the system, thus eliminating disastrous 'Ripple Effects', where changes in one part causes errors in another, necessitating additional changes elsewhere, giving rise to new errors, etc.

Note the words fewer and simpler. Refer to low coupling for a detailed description of "simpler".
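The C-to-X-to-M argument can be illustrated with a small sketch. The classes `M` and `X` and the functions `controllerDirect()` and `controllerIndirect()` are hypothetical, named after the modules in the text, and the Model does nothing except mark the data as updated.

```php
<?php
// Hypothetical Model (module M).
class M
{
    public function updateRecord(array $data): array
    {
        return $data + ['updated' => true];
    }
}

// Hypothetical intermediary (module X): it adds a call but no behaviour.
class X
{
    private M $model;

    public function __construct(M $model)
    {
        $this->model = $model;
    }

    public function updateRecord(array $data): array
    {
        return $this->model->updateRecord($data);   // simply forwards to M
    }
}

// Direct: one inter-module call (C-to-M).
function controllerDirect(M $model, array $data): array
{
    return $model->updateRecord($data);
}

// Indirect: two inter-module calls (C-to-X, then X-to-M).
function controllerIndirect(X $intermediary, array $data): array
{
    return $intermediary->updateRecord($data);
}

$direct   = controllerDirect(new M(), ['id' => 1]);
$indirect = controllerIndirect(new X(new M()), ['id' => 1]);
```

Both routes produce the same result. In this sketch a change to the signature of `updateRecord()` in M would have to be made in two places with the indirect route instead of one.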

Another principle that falls into that area is the information expert. Sometimes it is just called the expert or the expert principle. The problem that should be solved with the information expert is the delegation of responsibilities. The information expert is a class that contains all the information necessary to decide where responsibilities should be delegated to.

You identify the information expert by analyzing which information is required to fulfill a certain job and determining where the most of this information is stored.

If this is supposed to explain the idea of encapsulation then it is an abject failure. In order to create an object in the business/domain layer you first need to identify every entity which will be of interest to your application, an entity being something with data and operations that can be performed on that data. You then create a class for each entity with properties for its data and methods for its operations. In a database application these entities are called tables, and while the data in each table may be different all the operations - Create, Read, Update and Delete (CRUD) - are the same. It is important to note that each class should contain ALL the data and ALL the operations for that entity.

The Information Expert principle states that responsibilities should be assigned to the class with the most knowledge or information required to fulfill the responsibility. This principle promotes encapsulation and ensures that each class is responsible for managing its data and behavior.

Example: In a payroll system, the Employee class should be responsible for calculating its salary, as it has all the necessary information, such as base salary, hours worked, and bonuses.

This sounds as if, after I have created a class which has properties which represent a table's structure and methods which implement the CRUD operations, it is OK to include code to implement the business rules associated with that data. That to me is so obvious I can hardly believe that it needs to be identified as a principle with its own name. To me it would be illogical to create a class for an entity and then put the business rules for that entity in a different class.

- Given an object o, which responsibilities can be assigned to o?

- Expert principle says - assign those responsibilities to o for which o has the information to fulfill that responsibility.

- They have all the information needed to perform operations, or in some cases they collaborate with others to fulfill their responsibilities

Example:

- Assume we need to get all the videos of a VideoStore.

- Since VideoStore knows about all the videos, the responsibility of giving all the videos can be assigned to the VideoStore class.

- VideoStore is the information expert.

This principle is about creating a class for each entity which is responsible for holding the business rules for that entity. It is NOT concerned with how or where that class is instantiated into an object as that is covered by the Creator principle.

Problem: What is a basic principle by which to assign responsibilities to objects? Solution: Assign responsibility to the class that has the information needed to fulfill it.

Information expert (also expert or the expert principle) is a principle used to determine where to delegate responsibilities such as methods, computed fields, and so on.

Using the principle of information expert, a general approach to assigning responsibilities is to look at a given responsibility, determine the information needed to fulfill it, and then determine where that information is stored.

This will lead to placing the responsibility on the class with the most information required to fulfill it.

My interpretation of this principle, in line with the above definitions, is that "responsibility" equates to code while "information" equates to data. In a database application each database table is responsible for a different set of data which is defined in that table's structure, so each table should have its own class. The operations which can be performed on that data - Create, Read, Update and Delete (CRUD) - should also be included in that class. This then results in a separate concrete class for each database table which is responsible for the data held within that table as well as the operations which can be performed on that data.

Some of my critics have pointed out this arrangement results in classes where the code to perform the CRUD operations is duplicated, but this is not the case. Any code which could be duplicated is actually shared by virtue of the fact that it is inherited from a collection of common table methods which are defined in an abstract table class. This means that each concrete class contains only the non-sharable code which can be inserted into the numerous pre-defined "hook" methods.

Coupling exists when one module calls another, and can be described as being either tight or loose depending on how closely those two modules are connected. If module A can only call module B, or module B can only be called from module A, then those two modules are tightly coupled. If the two modules are interchangeable then they are loosely coupled.

Loose coupling helps maximise code reusability while tight coupling does exactly the opposite.

Low or loose coupling is the idea of creating very few links between modules. In GRASP it is an inter-module concept. Meaning it describes the flow between different modules, not the flow inside the modules.

Together with cohesion, it is the main reason upon which you make global design decisions such as "Where do we pack this module or function to?".

Low coupling is another concept that supports interchangeability. By making very few dependencies, very few code must be changed in case of a change.

Note here that the phrase "Low or loose coupling is the idea of creating very few links between modules" means that attempting to use indirection to de-couple one module from another is actually a bad idea as it replaces a direct dependency with an indirect dependency and increases the number of inter-module calls, therefore it increases the number of places which may need to be changed due to the ripple effect.

Low Coupling involves minimizing dependencies between classes to reduce the impact of changes and improve maintainability. This principle encourages independent and modular classes that can be easily modified without affecting other parts of the system.

Example: Instead of having a direct dependency between the Order class and the ShippingService class, we can introduce an interface, ShippingProvider, which reduces coupling and allows for easier substitution of shipping services.

I agree with the first statement but disagree with the example. In my main ERP application all orders are handled by tasks in the ORDER subsystem while shipments are handled by tasks in the SHIPMENT subsystem. These two subsystems are separate, and the only link between them is that each item to be shipped has a foreign key which refers back to an entry on the ORDER_ITEM table. An ORDER_ITEM can exist without a SHIPMENT, but a SHIPMENT cannot exist without an ORDER_ITEM.

- How strongly the objects are connected to each other?

- Coupling - object depending on other object.

- When depended upon element changes, it affects the dependent also.

- Low Coupling - How can we reduce the impact of change in depended upon elements on dependent elements.

- Prefer low coupling - assign responsibilities so that coupling remain low.

- Minimizes the dependency hence making system maintainable, efficient and code reusable

Two elements are coupled, if

- One element has aggregation/composition association with another element.

- One element implements/extends other element.

I do not agree that coupling exists with aggregation or composition or between a superclass and a subclass. It only exists when one module calls another. If the method call on an object is tied to a single object then that is an example of tight coupling, but if the identity of that object can be switched to another then that is an example of loose coupling.

Coupling is a measure of how strongly one element is connected to, has knowledge of, or relies on other elements. Low coupling is an evaluative pattern that dictates how to assign responsibilities for the following benefits:

- lower dependency between the classes,

- change in one class having a lower impact on other classes,

- higher reuse potential.

The above description echoes my own interpretation.

Coupling is a measure of how closely software components are connected to each other. It determines the extent to which changes in one component can affect other components within a system. Low/loose coupling indicates a loosely connected system where changes to one component have minimal impact on others. Conversely, high/tight coupling signifies a tightly connected system where changes in one component can ripple across the entire system, often leading to unintended consequences.

Here is an example which demonstrates tight coupling:

```php
<?php
require_once 'classes/person.class.inc';
$dbobject = new Person();
$dbobject->setUserID($_POST['userID']);
$dbobject->setEmail($_POST['email']);
$dbobject->setFirstname($_POST['firstname']);
$dbobject->setLastname($_POST['lastname']);
$dbobject->setAddress1($_POST['address1']);
$dbobject->setAddress2($_POST['address2']);
$dbobject->setCity($_POST['city']);
$dbobject->setProvince($_POST['province']);
$dbobject->setCountry($_POST['country']);
if ($dbobject->updatePerson($db) !== true) {
    // do error handling
}
?>
```

The code above exists within a Controller which calls a Model called Person. It is tightly coupled because of these obvious facts:

Here is an example which demonstrates loose coupling:

```php
<?php
// $table_id is provided by the previous script.
// Double quotes are needed so that $table_id is interpolated into the path.
require_once "classes/{$table_id}.class.inc";
$dbobject = new $table_id;
$result = $dbobject->updateRecord($_POST);
if ($dbobject->errors) {
    // do error handling
}
?>
```

You should notice the following differences:

Cohesion describes the contents of a module: the degree to which the responsibilities of a single module/component form a meaningful unit, and the degree of interaction within a module. It forms the basis of the Single Responsibility Principle (SRP), Separation of Concerns (SoC) and the 3 Tier Architecture.

In GRASP high (functional) cohesion is an intra-module concept, meaning that it describes the flow inside a certain module, not the flow between modules.

The main reason behind high cohesion is the idea of reducing complexity. Instead of building large classes that have many functions that have few in common and are hard to maintain, the goal should be to create classes that fit exactly their defined purpose.

As well as avoiding the creation of large classes containing unrelated functions, it is also supposed to avoid the splitting up of related functions into a collection of small classes, as this would mean searching through many classes to find the right function.

High Cohesion means grouping related responsibilities together within a single class to make it easier to understand, maintain, and reuse. This principle ensures that each class has a single, focused purpose.

Example: In a blogging platform, the Blog class should only be responsible for managing blog-related activities, such as adding and removing posts, and not for handling user authentication.

- How are the operations of any element functionally related?

- Related responsibilities in to one manageable unit.

- Prefer high cohesion

- Clearly defines the purpose of the element

- Benefits

- Easily understandable and maintainable.

- Code reuse

- Low coupling

High cohesion is an evaluative pattern that attempts to keep objects appropriately focused, manageable and understandable. High cohesion is generally used in support of low coupling. High cohesion means that the responsibilities of a given set of elements are strongly related and highly focused on a rather specific topic. Breaking programs into classes and subsystems, if correctly done, is an example of activities that increase the cohesive properties of named classes and subsystems. Alternatively, low cohesion is a situation in which a set of elements, of e.g., a subsystem, has too many unrelated responsibilities. Subsystems with low cohesion between their constituent elements often suffer from being hard to comprehend, reuse, maintain and change as a whole.

My framework is based on a combination of the 3 Tier Architecture and the Model-View-Controller (MVC) design pattern as shown in Figure 1 above. The responsibilities are apportioned as follows:

This correctly separates each area of logic into its own component. Each component in one layer can be used with any component in another layer because of the power of polymorphism.

The only description of polymorphism which made sense to me was "same interface, different implementation", but in this context "interface" means "method signature" and not that artificial construct which uses the keywords "interface" and "implements".

Polymorphism could be frankly translated as "something that imitates many forms". And that might be a concise but useless explanation to someone who has never heard of polymorphism.

Anyone who ever took a programming class is most likely familiar with polymorphism. But, it can be a tricky question to define it sensefully.

The idea again is to reduce complexity by imagining that objects follow simple and similar rules. Consider the following example: You have three objects A, B and C. B has the same methods and attributes but has an additional method X. C has the same methods and attributes as B, but an additional method Y.

Polymorphism is the concept of disassembling objects and ideas into their most atomic elements and abstracting their commonalities to build objects that can act like they were others. Not only to reduce complexity but also to avoid repetitions.

The above description is next to useless. Polymorphism occurs when the same method signature is available in more than one object, which then allows the object which contains that signature to be swapped with another object to obtain a different result. The mechanism for performing this swap is called Dependency Injection.

Polymorphism involves using inheritance and interfaces to enable different classes to implement the same behavior or operation. This principle allows for more flexibility and easier code maintenance by enabling the system to handle varying implementations without modifications to the existing code.

Example: In a graphics application, the Shape interface defines the common behavior for different shapes, such as Circle and Rectangle, allowing them to be rendered in a consistent manner.

Inheritance involves the keyword extends, not the keyword implements. With an interface nothing is shared, as the method signatures have to be duplicated in every class which implements it.

- How to handle related but varying elements based on element type?

- Polymorphism guides us in deciding which object is responsible for handling those varying elements.

- Benefits: handling new variations will become easy.

"Element type" is much too vague as it can be interpreted in different ways. In a database application which accesses dozens or even hundreds of database tables does the class for each individual table constitute a separate "type", or does the fact that they are all database tables mean that they all share the same type which is "database table"?

According to the polymorphism principle, responsibility for defining the variation of behaviors based on type is assigned to the type for which this variation happens. This is achieved using polymorphic operations. The user of the type should use polymorphic operations instead of explicit branching based on type. Problem: How to handle alternatives based on type? How to create pluggable software components? Solution: When related alternatives or behaviors vary by type (class), assign responsibility for the behavior - using polymorphic operations - to the types for which the behavior varies. (Polymorphism has several related meanings. In this context, it means "giving the same name to services in different objects".)

This description is far too vague and imprecise, which means that it can lead novice programmers down the wrong path. Polymorphism requires that the same method signature exists in multiple classes so that the class from which an object is instantiated can be varied, in which case the method call will be the same but the implementation (i.e. the code within that method) will be different. The question "how to handle alternatives based on type" should be answered with Dependency Injection.

Since a major motivation for object-oriented programming is software reuse it should follow that a good programmer should create as much reusable/sharable software as possible. Implementing an interface does not produce any reusable code, so nothing is inherited. True inheritance comes from extending an existing class so that the contents of the superclass can be shared by each subclass. Extending from a concrete class to create a different concrete class can cause problems, so the best way to avoid such problems is to only ever inherit from an abstract class, as explained in Designing Reusable Classes which was published in 1988 by Ralph E. Johnson & Brian Foote.

Inheriting from an abstract class has the added benefit of allowing the implementation of the Template Method Pattern so that standard boilerplate code in the abstract superclass can be augmented with custom code in "hook" methods in each concrete subclass. This is precisely why I created an abstract table class with a set of common table methods which is then shared by every concrete table class (Model). This means that each concrete table class need only contain whatever "hook" methods are necessary to add custom business rules or behaviour.
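The arrangement can be sketched roughly as follows. This is not the framework's actual code: `AbstractTable`, `CustomerTable`, `ProductTable` and the hook names `preInsert()` and `postInsert()` are all hypothetical, and the database write is replaced by a stand-in.

```php
<?php
// Hypothetical abstract superclass, NOT the framework's actual code.
abstract class AbstractTable
{
    // Template method: the invariant sequence of steps lives here.
    final public function insertRecord(array $row): array
    {
        $row = $this->preInsert($row);   // hook, may be overridden
        $row['saved'] = true;            // stand-in for the real INSERT
        return $this->postInsert($row);  // hook, may be overridden
    }

    // Default hooks do nothing, so a subclass overrides only what it needs.
    protected function preInsert(array $row): array
    {
        return $row;
    }

    protected function postInsert(array $row): array
    {
        return $row;
    }
}

// A concrete table class contains only its custom business rule.
class CustomerTable extends AbstractTable
{
    protected function preInsert(array $row): array
    {
        $row['name'] = strtoupper($row['name']);
        return $row;
    }
}

// A table with no custom rules needs no methods at all.
class ProductTable extends AbstractTable
{
}

print_r((new CustomerTable())->insertRecord(['name' => 'smith']));
```

All the boilerplate is inherited, so the amount of code in each subclass is proportional to the number of custom rules and nothing else.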

The Protected Variations principle also goes by the name Open-Closed Principle (OCP), which has the following description:

software entities should be open for extension, but closed for modification

I have always found this description to be very confusing and imprecise, and due to the lack of examples which are capable of demonstrating its merits I have chosen to ignore it completely.

The protected variations pattern is a pattern used to create a stable environment around an unstable problem. You wrap the functionality of a certain element (class, interfaces, whatever) with an interface to then create multiple implementations by using the concept of polymorphism. Thereby you are able to catch specific instabilities with specific handlers.

An example of the protected variations pattern is working with sensory data, such as a DHT22 (a common temperature and humidity sensor often used for Raspberry Pi or Arduino). The sensor is doing its job pretty well, but sometimes it will say the temperature just rose by 200 degrees Celsius or won't return any data at all. These are cases you should catch using the protected variations pattern to avoid false behavior of your program.

Can you give me an example of an unstable problem? How is this different from an ordinary problem? Your example of a temperature sensor is useless when all I do is write enterprise applications with HTML at the front end and SQL at the back end.

Protected Variations involve encapsulating variations and changes in the system behind stable interfaces to minimize the impact of changes and increase the system's robustness. This principle can be applied by using abstractions, such as interfaces or abstract classes, to hide implementation details.

Example: In a payment processing system, the Payment gateway interface protects the system from changes in the implementations of different payment methods, like CreditCardPayment or PayPalPayment.

Are you saying that if a class performs a function, but the implementation of that function could vary, that I should put each variation into its own class behind a stable interface so that I can add a new variation without having to change any of the existing classes? If so this sounds like the issue raised by Robert C. Martin in the "Copy" program. Why is there no reference to polymorphism if this is an important aspect of the principle?
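If that reading is correct, a minimal sketch might look like the following. The names `PaymentGateway`, `CreditCardPayment` and `PayPalPayment` come from the quoted example, while the `pay()` method and the `checkout()` function are assumptions made purely for illustration.

```php
<?php
// Names taken from the quoted example; pay() is a hypothetical method.
interface PaymentGateway
{
    public function pay(float $amount): string;
}

class CreditCardPayment implements PaymentGateway
{
    public function pay(float $amount): string
    {
        return sprintf('Charged %.2f to credit card', $amount);
    }
}

class PayPalPayment implements PaymentGateway
{
    public function pay(float $amount): string
    {
        return sprintf('Charged %.2f via PayPal', $amount);
    }
}

// The calling code depends only on the stable signature. A new payment
// method means a new class, with no change to this function.
function checkout(PaymentGateway $gateway, float $amount): string
{
    return $gateway->pay($amount);
}

echo checkout(new CreditCardPayment(), 9.5), PHP_EOL;
echo checkout(new PayPalPayment(), 9.5), PHP_EOL;
```

Note that the "protection" is supplied entirely by polymorphism: the same method signature appears in each class, and the caller is handed whichever object is required.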

- How to avoid impact of variations of some elements on the other elements.

- It provides a well-defined interface so that there will be no effect on other units.

- Provides flexibility and protection from variations.

- Provides more structured design.

Again there is no reference to polymorphism.

The protected variations pattern protects elements from the variations on other elements (objects, systems, subsystems) by wrapping the focus of instability with an interface and using polymorphism to create various implementations of this interface.

Problem: How to design objects, subsystems, and systems so that the variations or instability in these elements do not have an undesirable impact on other elements? Solution: Identify points of predicted variation or instability; assign responsibilities to create a stable interface around them.

This seems to be hinting that you should create an interface or abstract class with a stable API and move all the variations into concrete subclasses. This could eventually lead to an implementation of the Template Method Pattern with its support for invariant and variable methods, but there is no mention of this. This is a serious omission as it leaves too much to the imagination, which is not good enough for something which is supposed to be a teaching aid to novice programmers.

To add to the confusion there are two different variations of this principle:

- A module will be said to be open if it is still available for extension. For example, it should be possible to add fields to the data structures it contains, or new elements to the set of functions it performs.

- A module will be said to be closed if [it] is available for use by other modules. This assumes that the module has been given a well-defined, stable description (the interface in the sense of information hiding).

This appears to say that if I have a Model class which is available for use from a Controller then I am forbidden to make changes to the Model's properties. Experience has taught me that in the lifetime of a database table it is not impossible to want to add, remove or change columns, so I cannot be constrained by this description.

During the 1990s, the open-closed principle became popularly redefined to refer to the use of abstracted interfaces, where the implementations can be changed and multiple implementations could be created and polymorphically substituted for each other.

In contrast to Meyer's usage, this definition advocates inheritance from abstract base classes. Interface specifications can be reused through inheritance but implementation need not be. The existing interface is closed to modifications and new implementations must, at a minimum, implement that interface.

The second description is a better match for my framework as I have two areas where polymorphism allows me to add a new implementation by creating a new class which reuses a pre-defined set of method signatures instead of having to add new functionality to an existing class. These areas are:

To reach low coupling and high cohesion, it is sometimes necessary to have pure fabrication code and classes. What is meant by that is that this is code that does not solve a certain real-world problem besides ensuring low coupling and high cohesion. This is often achieved by using factory classes.

Pure Fabrication involves creating an artificial class to fulfill a specific responsibility when no suitable class exists. This principle aims to maintain high cohesion and low coupling by avoiding the assignment of unrelated responsibilities to existing classes.

Example: In a file storage application, the FileStorage class can be created to handle file storage operations, separating it from the core business logic.

- Fabricated class/ artificial class - assign set of related responsibilities that doesn't represent any domain object.

- Provides a highly cohesive set of activities.

- Behavioral decomposed - implements some algorithm.

- Examples: Adapter, Strategy

- Benefits: High cohesion, low coupling and can reuse this class.

A pure fabrication is a class that does not represent a concept in the problem domain, specially made up to achieve low coupling, high cohesion, and the reuse potential thereof derived (when a solution presented by the information expert pattern does not). This kind of class is called a "service" in domain-driven design.

As explained in Object Classification this type of functionality cannot be classed as an entity which resides in the business/domain layer, so it is more like a service which can be called at any time to perform a specified function. Note that this should not be used as a bucket for a mixture of utility methods which don't belong anywhere else as the principle of high cohesion dictates that a class should only contain methods which share in the same responsibility. The RADICORE framework has two such classes:

Note that it would not be good practice to put all this functionality into a single utility class as data validation and date conversion are not related in any way.
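As a rough illustration of keeping unrelated services apart, here is a sketch of two small stateless classes. The names `ValidationService` and `DateService`, and their methods, are hypothetical and are not the framework's actual classes.

```php
<?php
// Hypothetical stateless services; names and methods are assumptions.
class ValidationService
{
    // Returns an error message, or null when the value is acceptable.
    public function checkRequired(string $field, ?string $value): ?string
    {
        return ($value === null || trim($value) === '')
            ? "$field is required"
            : null;
    }
}

class DateService
{
    // Converts a display date such as "25 Dec 2023" into "2023-12-25".
    // Returns null when the input cannot be parsed in that format.
    public function toInternal(string $display): ?string
    {
        $date = DateTimeImmutable::createFromFormat('!d M Y', $display);
        return $date === false ? null : $date->format('Y-m-d');
    }
}

var_dump((new ValidationService())->checkRequired('name', ''));
var_dump((new DateService())->toInternal('25 Dec 2023'));
```

Neither class represents anything in the problem domain and neither holds any state, yet each remains cohesive because it has a single area of responsibility.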

Although the intent of the numerous programming principles, practices, patterns and rules may be laudable, I have found that the wording they use is sometimes too ambiguous and imprecise and therefore subject to misinterpretation which then leads to implementations of questionable quality and efficacy. Since a major motivation for object-oriented programming is software reuse it should follow that only those principles and practices which promote the production of reusable software should be eligible for nomination as "best". It should also follow that out of the many possible ways in which any one of these principles may be implemented, the only one which should be labelled as being the "best" should be the one that produces the "most" reusable software. There is no such thing as a single perfect or definitive implementation which must be duplicated as different programmers may devise different ways to achieve the desired objectives by using a combination of their own intellect, experience and the capabilities or restrictions of the programming language being used. Remember also that "best" is a comparative term which means "better than the alternatives", but far too many of today's dogmatists refuse to even acknowledge that any alternatives actually exist. If any alternatives are proposed their practitioners are automatically labelled as being heretics who should be ignored, or perhaps vilified, chastised, or even burned at the stake.

As you should see from the above content there are many different views as to the actual meaning of each of the nine parts of GRASP, and along with each different meaning there is a different "suggested" implementation. My own implementations may be different from any of those put forward simply because I have found what I believe is a better way, usually simpler and with less code, to achieve the desired result.

I do not follow those principles or design patterns which I deem to be inappropriate, such as those devised for a strictly typed language when the one that I'm using is dynamically typed, or those which are not relevant in web-based database applications. Those that I do follow I will implement to the best of my ability based on my interpretation of what the principle or pattern is supposed to mean or supposed to achieve. My implementation is always results-oriented and not rules-oriented. I am a pragmatist, not a dogmatist.

When I say that the results which I achieve are better than any others which I have seen this is not just an idle boast, it is backed up by facts which can be measured. If you don't believe me then I suggest you take this challenge which shows how I can create a family of six forms to maintain the contents of a database table in under 5 minutes without writing a single line of code - no PHP, no HTML, no SQL. The resulting user transactions will perform all the common processing, up to and including primary data validation, which leaves the developer with nothing to do but insert the non-standard or custom code. Remember that the more reusable code you have at your disposal the less code you have to write, and there is nothing smaller than no code at all.

Here endeth the lesson. Don't applaud, just throw money.

These are reasons why I consider some ideas on how to do OOP "properly" to be complete rubbish: