This industry is pre-occupied with reuse.

There's this belief that if we just reused more code, everything would be better.

Some even go so far as saying that the whole point of object-orientation was reuse - it wasn't, encapsulation was the big thing.

I disagree. Encapsulation was not the only thing that OOP brought to the world, and on its own it does not have much value. Its true value appears when it is coupled with Inheritance and Polymorphism. Encapsulation enables you to create classes containing both state (properties or variables) and behaviour (methods or operations) which can be instantiated into objects. Inheritance allows the contents of one class to be shared by a subclass with a single extends keyword. Polymorphism exists when several classes contain the same methods. The code which calls one of those methods can then be reused with an object created from any one of those classes, using the technique known as Dependency Injection. These three features together provide ways to reuse code that are not available in non-OO languages. This is emphasised in the following definition of OOP, which is the only one that I find useful:
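The following minimal sketch shows how the three features combine. It is in Python rather than the author's PHP, and all class and function names are hypothetical. Shared behaviour lives in an abstract class and is inherited. Each subclass supplies only its own rules. The caller receives whichever object it is given (dependency injection) and calls the same method on it (polymorphism).

```python
from abc import ABC, abstractmethod


class AbstractTable(ABC):
    """Hypothetical abstract class: shared code is inherited, not duplicated."""

    def __init__(self):
        # Encapsulation: state and the behaviour that acts on it live together.
        self.rows = []
        self.errors = []

    def insert_record(self, data):
        # Generic steps shared by every subclass.
        self.errors = self.validate(data)
        if self.errors:
            return False
        self.rows.append(dict(data))
        return True

    @abstractmethod
    def validate(self, data):
        """Subclass-specific rules; returns a list of error messages."""


class Customer(AbstractTable):
    def validate(self, data):
        return [] if data.get("name") else ["name is required"]


class Product(AbstractTable):
    def validate(self, data):
        return [] if data.get("price", 0) > 0 else ["price must be positive"]


def add_record(table, data):
    # Dependency injection: the caller decides which object is used.
    # Polymorphism: this one function works with any AbstractTable subclass.
    return table.insert_record(data)
```

For example, `add_record(Customer(), {"name": "Acme"})` succeeds and `add_record(Product(), {"price": 0})` fails. Both go through the same unchanged `add_record` code.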

Object Oriented Programming is programming which is oriented around objects, thus taking advantage of Encapsulation, Inheritance and Polymorphism to increase code reuse and decrease code maintenance.

Since a major motivation for object-oriented programming is software reuse, this paper describes how classes are developed so that they will be reusable.

Entire books of patterns have been written on how to achieve reuse with the orientation of the day. Services have been classified every which way in trying to achieve this, from entity services and activity services, through process services and orchestration services. Composing services has been touted as the key to reusing, and creating reusable services.

I'm afraid that there is no such thing as an entity service - an object is either an entity or a service. The distinction between the two is explained in Evans Classification of Objects in Object-Oriented Programming as follows:

| Type | Description |
|---|---|
| Entities | An object whose job is to hold state and associated behavior. The state (data) can be persisted to and retrieved from a database. Examples of this might be Account, Product or User. In my framework each database table has its own Model class. |
| Services | An object which performs an operation. It encapsulates an activity but has no encapsulated state (that is, it is stateless). Examples of Services could include a parser, an authenticator, a validator or a transformer (such as transforming raw data into HTML, CSV or PDF). In my framework all Controllers, Views and DAOs are services. |
| Value objects | An immutable object whose responsibility is mainly holding state but may have some behavior. Examples of Value Objects might be Color, Temperature, Price and Size. PHP does not support value objects, so I do not use them. I have written more on the topic in Value objects are worthless. |
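This is a minimal Python sketch of the first two rows of the table, using hypothetical names. `Product` is an entity: it holds the state of one row from an assumed `product` table plus behaviour that changes that state. `CsvTransformer` is a service: it performs an operation on whatever data it is given and keeps no state of its own. Value objects are left out, in line with the author's remark that he does not use them.

```python
import csv
import io


class Product:
    """Entity: holds state (one row of a hypothetical 'product' table) and behaviour."""

    def __init__(self, product_id, name, price):
        self.product_id = product_id
        self.name = name
        self.price = price

    def apply_discount(self, percent):
        # Behaviour that changes the entity's own state.
        self.price = round(self.price * (100 - percent) / 100, 2)

    def to_dict(self):
        return {"product_id": self.product_id, "name": self.name, "price": self.price}


class CsvTransformer:
    """Service: transforms raw data into CSV and holds no state between calls."""

    def transform(self, records):
        if not records:
            return ""
        buffer = io.StringIO()
        writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()), lineterminator="\n")
        writer.writeheader()
        writer.writerows(records)
        return buffer.getvalue()
```

Because the service is stateless, a single `CsvTransformer` instance could be shared by every caller. Each `Product` instance, by contrast, represents one particular row.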

Reuse is a fallacy

the actual goal of reuse was: getting done faster.

And here's how reuse fits into the picture:

- If we were to write all the code of a system, we'd write a certain amount of code.

- If we could reuse some code from somewhere else that was written before, we could write less code.

- The more code we can reuse, the less code we write.

- The less code we write, the sooner we'll be done!

I agree. The more code you can reuse, the less code you have to write and the quicker you'll get the job done. Duplicating the same block of code over and over again takes more time than writing a single call to a central version of that code.

Note that this is not just about reusing code that someone else has written. A competent programmer should be able to create reusable components from his own code. All that it takes is the ability to spot patterns of duplicated or similar behaviour. The next step is to separate the similar from the different, so that the similar can be moved to a reusable module and the different can be left in a unique module. Any programmer who can only create software using components that other people have written cannot call themselves a software engineer, as they are nothing more than a fitter. An engineer can design, build and repair parts, while a fitter can only assemble parts which were created by someone with greater skill. If a part breaks, a fitter cannot diagnose it or fix it; he can only replace it.
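Here is a small sketch of separating the similar from the different, using hypothetical function names. Suppose two listing routines were originally written as near-identical copies that differed only in how each row was formatted. After refactoring, the shared steps (title, underline, row loop, row count) live in one reusable function. Only the formatting differs between callers, and each caller supplies its own small formatter.

```python
def render_rows(title, rows, format_row):
    """Reusable module: the steps every listing has in common.

    Only `format_row`, the part that differs, is supplied by the caller.
    """
    lines = [title, "-" * len(title)]
    for row in rows:
        lines.append(format_row(row))
    lines.append(f"{len(rows)} row(s)")
    return "\n".join(lines)


# Unique modules: only the behaviour that is genuinely different.
def format_customer(row):
    return f"{row['id']}: {row['name']}"


def format_order(row):
    return f"Order {row['id']} total {row['total']:.2f}"
```

A third listing now needs only a new one-line formatter, not another copy of the loop.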

Fallacy: All code takes the same amount of time to write

I disagree. Programmers do not write code at the same speed as a typist working from a dictaphone. There is a lot of thinking involved, mainly on how to translate the human-readable specification into machine-readable instructions, especially the most efficient machine-readable instructions. Simply writing down the first thing that comes into your head may not produce the most efficient or effective code. That code also has to be arranged into a proper structure for ease of maintenance.

Fallacy: Writing code is the primary activity in getting a system done

Writing code is what happens after the analysis and design phases and before the testing, training, documentation and release phases. It is just one activity among many, and just as vital as all the others. If it is not done, or is done badly, then the whole exercise will be an expensive waste of time.

Writing code is actually the least of our worries. We actually spend less time writing code than ...

Rebugging code.

Also known as bug regressions.

This is where we fix one piece of code, and in the process break another piece of code.

It's not like we do it on purpose. It's all those dependencies between the various bits of code. The more dependencies there are, the more likely something's gonna break.

Dependencies multiply by reuse

It's to be expected. If you wrote the code all in one place, there are no dependencies. By reusing code, you've created a dependency. The more you reuse, the more dependencies you have. The more dependencies, the more rebugging.

Reducing duplicated code to a single source which can be reused many times is the essence of the DRY principle and is far superior to the alternative. Suppose you take a block of duplicated code and put it into a reusable module which turns out to have bugs. Either the code was buggy to begin with, in which case the same bugs will exist in every duplicated copy, or you introduced a bug while creating the reusable module. That is down to poor programming, not to the act of creating a reusable module.

That's the trade-off with reusable code: if it's called from 100 places and you introduce a bug, then all 100 calls will fail. Again, that is down to poor programming. If you replaced those 100 calls with 100 duplicated copies of that code, you would have an even bigger job on your hands, as every fix would have to be applied to every copy.

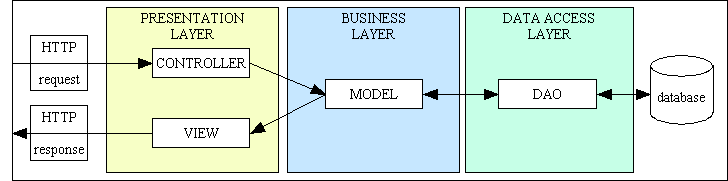

When writing a large enterprise application which has to deal with 100s of transactions, 100s of database tables and 100s of screens, none of today's professional programmers would write monolithic single-tier programs. He would be regarded as inept if he did not utilise some sort of layered architecture such as the 3-Tier Architecture or the Model-View-Controller design pattern, as shown in Figure 1 below. Note that each of the boxes in the diagram is a hyperlink which will take you to a detailed description of that component.

Figure 1 - MVC and 3 Tier Architecture combined

Each module has a single responsibility, thus following the principle of high cohesion. Because modules must call one another, you will always have coupling and dependencies. The aim is to produce software which exhibits high cohesion and low (loose) coupling. Each user transaction requires one of each of the four modules shown in Figure 1, and it is possible to create unique versions of each without a single line of reusable code. However, a skilled programmer would never do such a thing. Part of his skill is to identify repeating patterns, where code is duplicated, and to replace all that duplicated code with references to a central shared version. Once the shared module has been written, thoroughly tested and debugged, it should be reusable any number of times without problems. The fact that a piece of code is reusable does not increase the likelihood of bugs suddenly appearing; when they do appear, it is usually because the code was given bad or unexpected data.

The value of (re)use

If we were to (re)use a piece of code in only one part of our system, it would be safe to say that we would get less value than if we could (re)use it in more places.

So, what characterizes the code we use in many places? Well, it's very generic. Actually, the more generic a piece of code, the less likely it is that we'll be changing something in it when fixing a bug in the system.

However, when looking at the kind of code we reuse, and the reasons around it, we tend to see very non-generic code - something that deals with the domain-specific behaviors of the system. Thus, the likelihood of a bug fix needing to touch that code is higher than in the generic/use-not-reuse case, often much higher.

Making a block of code reusable and then not reusing it seems to me like a waste of effort. A block of code does not increase in value just because you reuse it in more places. Its value is the time saved in NOT having to write code that has already been written somewhere else. There may only be a subtle difference between those two viewpoints, but there is a difference.

I agree that in a software system there are basically only two categories of code - either generic (boilerplate) code or non-generic (unique) code. It is only the business logic which has value to the end user, the paying customer, as the generic code has no purpose other than to find a path to where the business logic is located so that it can be processed. This means that it is much more likely that the generic code can be placed in reusable components as writing the same code over and over again would not be very efficient. Higher productivity can only be achieved by reducing the amount of generic/boilerplate code that has to be written so that more time is available for the unique code.

In the structure diagram shown in Figure 1, it should be obvious that the business logic should exist in only one place: the business layer. This means that the components in the other layers should consist of generic/boilerplate code and are therefore ripe for a large amount of reuse.
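This is a minimal in-memory sketch of that layering. It uses hypothetical names and is not the RADICORE framework's actual API. Only the `Customer` class contains business rules. The controller, view and data access object contain no table-specific code, so the same three components could serve any other `Model` subclass unchanged.

```python
class InMemoryDAO:
    """Data access layer (generic): one class serves every table."""

    def __init__(self):
        self.tables = {}

    def insert(self, table_name, row):
        self.tables.setdefault(table_name, []).append(dict(row))

    def select_all(self, table_name):
        return [dict(r) for r in self.tables.get(table_name, [])]


class Model:
    """Business layer: the only place business rules live. One subclass per table."""

    table_name = None

    def __init__(self, dao):
        self.dao = dao

    def validate(self, row):
        return []  # no rules by default; subclasses add their own

    def insert(self, row):
        errors = self.validate(row)
        if not errors:
            self.dao.insert(self.table_name, row)
        return errors


class Customer(Model):
    table_name = "customer"

    def validate(self, row):
        return [] if row.get("email", "").count("@") == 1 else ["invalid email"]


def text_view(rows):
    """Presentation layer (generic): renders rows from any table."""
    return "\n".join(", ".join(f"{k}={v}" for k, v in row.items()) for row in rows)


def add_controller(model, row, view):
    """Controller (generic): the same code drives an insert for any Model."""
    errors = model.insert(row)
    if errors:
        return "ERROR: " + "; ".join(errors)
    return view(model.dao.select_all(model.table_name))
```

Adding a new table in this sketch means writing one new `Model` subclass. The other three components are reused as they are.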

This doesn't mean you shouldn't use generic code / frameworks where applicable - absolutely, you should. Just watch the number and kind of dependencies you introduce.

Reducing the number of dependencies (a dependency exists wherever one module calls another) is a good idea, but an equally important factor is the strength of that coupling.

- The coupling should be as loose as possible so as to avoid the ripple effect when a change in one module forces changes to other modules.

- Attempting to "decouple" the relationship between two modules by inserting an intermediate module between them actually has the opposite effect, as explained in Decoupling is delusional.

So, if we follow the above advice with services, we wouldn't want domain specific services reusing each other. If we could get away with it, we probably wouldn't even want them using each other either.

Any object which is domain specific must exist in the business/domain layer and is therefore an entity, not a service. I do not agree that a domain object should not call a method on another domain object. In a database application, especially an enterprise application, each table in the database is a separate entity with its own structure and business rules. It should therefore have its own concrete class, with all shared code inherited from an abstract class. It is perfectly normal for a user transaction to access more than one table, and each of these accesses should be performed through the object which is responsible for that table. The only exception is when data from multiple tables can be read more efficiently by performing a JOIN in an SQL SELECT query.
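Here is a minimal sketch of one transaction touching two tables, with hypothetical names and a plain dictionary standing in for the database. Shared code sits in an abstract `Table` class. Each table has its own concrete class. The `Order` rule that needs customer data asks the `Customer` object for it rather than reading the customer table directly.

```python
from abc import ABC, abstractmethod


class Table(ABC):
    """Hypothetical abstract class holding code shared by every table class."""

    @property
    @abstractmethod
    def table_name(self):
        """Each concrete table class must name its table."""

    def __init__(self, database):
        # Stand-in for a real database: {table name: {primary key: row}}.
        self.database = database

    def get_record(self, key):
        return self.database.get(self.table_name, {}).get(key)

    def insert_record(self, key, row):
        errors = self.validate(row)
        if not errors:
            self.database.setdefault(self.table_name, {})[key] = dict(row)
        return errors

    def validate(self, row):
        return []  # no rules by default; concrete classes override this


class Customer(Table):
    table_name = "customer"


class Order(Table):
    table_name = "order"

    def validate(self, row):
        # This rule needs data from the customer table, so it asks the
        # object responsible for that table instead of reading it directly.
        customer = Customer(self.database).get_record(row.get("customer_id"))
        return [] if customer is not None else ["unknown customer"]
```

In a real application the lookup would go through the database layer. Where a screen needs columns from both tables at once, a JOIN would be the exception the text describes.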

As use and reuse go down, we can see that service autonomy goes up. And vice-versa.

Use and reuse have nothing to do with service autonomy, which is described in Wikipedia as follows:

Service autonomy is a design principle that is applied within the service-orientation design paradigm, to provide services with improved independence from their execution environments.

Being "independent from their execution environments" means the following. Suppose you have an application which is made up of multiple domains/subsystems, such as the GM-X Application Suite which was developed using the RADICORE framework. Having a service which can be reused in multiple domains is then much better than having a separate version of that service for each domain. So as service autonomy goes up, the reuse of that service also goes up, which contradicts the statement above.

Luckily, we have service interaction mechanisms from Event-Driven Architecture that enable use without breaking autonomy.

According to Wikipedia, an event is nothing more than "a significant change in state". In every application that I have written, an object does not change its state unless it has received a request to do so. In a web application that request is an HTTP request, which can be either a GET or a POST. In a database application a change in state can only be in response to a request to perform an insert, update or delete operation. The code for each of those operations may or may not be reused. Either way, provided it has been properly written, the operation should succeed regardless of where the request originated.
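Here is a minimal sketch of that point, with hypothetical names. The `Account` object changes state only inside its `update` method. The same method is called whether the request arrives as an HTTP POST or as an event delivered by some messaging mechanism, which is not modelled here. Neither caller needs its own copy of the update logic.

```python
class Account:
    """Hypothetical entity whose state changes only when it is asked to."""

    def __init__(self, balance=0):
        self.balance = balance
        self.history = []

    def update(self, amount, source):
        # The same update code runs regardless of where the request came from.
        if self.balance + amount < 0:
            raise ValueError("insufficient funds")
        self.balance += amount
        self.history.append((source, amount))
        return self.balance


def handle_http_post(account, form):
    # Request arriving from a web form; form values are strings.
    return account.update(int(form["amount"]), source="http")


def handle_event(account, event):
    # Request arriving as an event message; payload already typed.
    return account.update(event["amount"], source="event")
```

The two handlers differ only in how they unpack their input. The state change itself is the one shared `update` method.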